Abstract

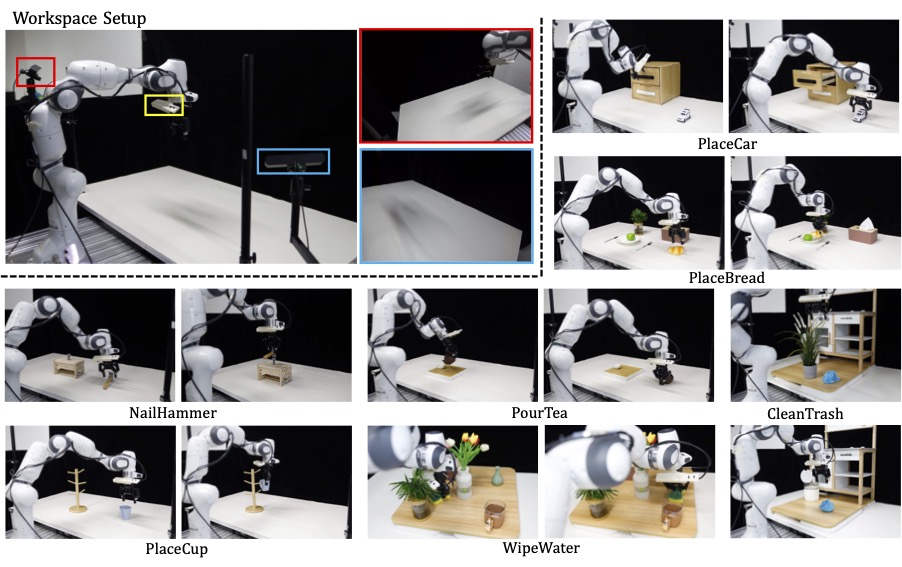

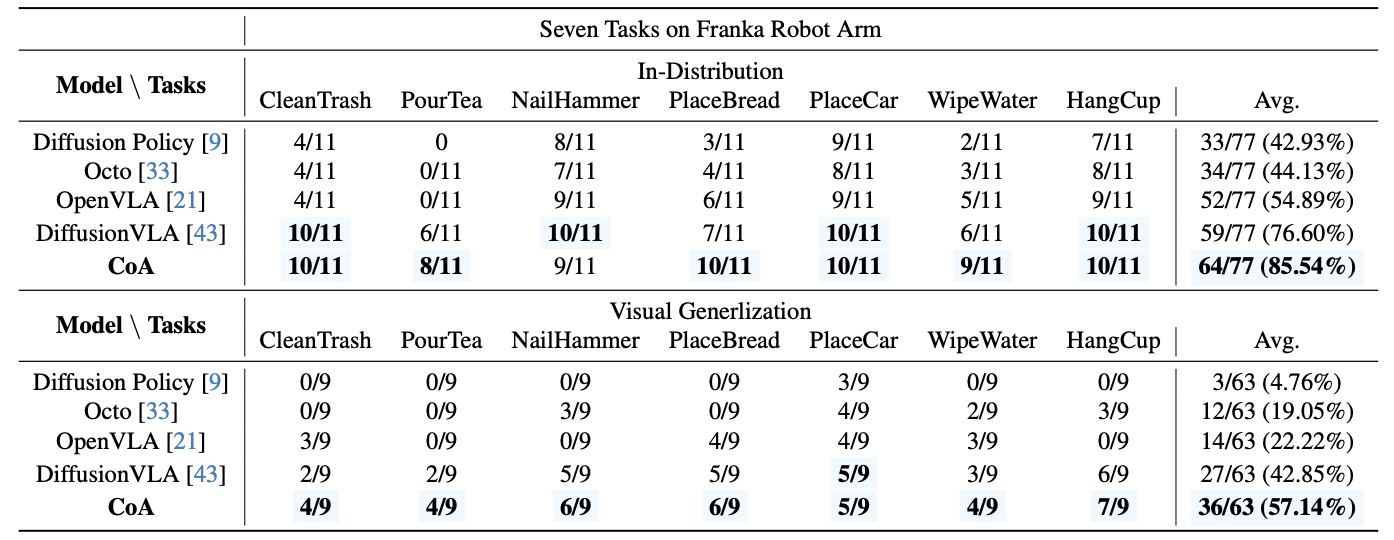

Robot foundation models, particularly Vision-Language-Action (VLA) models, have garnered significant attention for their ability to enhance robot policy learning, greatly improving robots' generalization and robustness. OpenAI's recent model, o1, showcased impressive capabilities in solving complex problems by using extensive reasoning chains. This prompts an important question: can robot models achieve better performance in multi-task, complex environments by reviewing prior observations and then providing task-specific reasoning to guide action prediction?

In this paper, we introduce Chain-of-Affordance (CoA), a novel approach to scaling robot models by incorporating reasoning in the format of sequential robot affordances to facilitate task completion. Specifically, we prompt the model to consider the following four types of affordances before taking action: (1) object affordance: what object to manipulate and where it is; (2) grasp affordance: the specific object part to grasp; (3) spatial affordance: the optimal space to place the object; and (4) movement affordance: the collision-free path for movement. By integrating this knowledge into the policy model, the robot gains essential context, allowing it to act with increased precision and robustness during inference. Our experiments demonstrate that CoA outperforms state-of-the-art robot foundation models such as OpenVLA and Octo. Additionally, CoA generalizes well to unseen object poses, identifies free space, and avoids obstacles in novel environments.
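To make the four affordance types concrete, the following is a minimal sketch of how one chain of affordances might be represented and serialized into reasoning text ahead of action prediction. It is an illustration only, not the authors' implementation: the class `ChainOfAffordance`, its field names, the 2D normalized image coordinates, and the text layout produced by `to_reasoning_text` are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical types for illustration; none of these names come from the paper.
Point = Tuple[float, float]              # assumed normalized (x, y) image coordinates
Box = Tuple[float, float, float, float]  # assumed (x1, y1, x2, y2) bounding box


@dataclass
class ChainOfAffordance:
    object_name: str        # (1) object affordance: what to manipulate ...
    object_box: Box         # ... and where it is
    grasp_point: Point      # (2) grasp affordance: the part of the object to grasp
    place_point: Point      # (3) spatial affordance: free space to place the object
    waypoints: List[Point]  # (4) movement affordance: collision-free path


def _fmt(point: Point) -> str:
    """Format a point with two decimals, e.g. (0.30, 0.40)."""
    return f"({point[0]:.2f}, {point[1]:.2f})"


def to_reasoning_text(coa: ChainOfAffordance) -> str:
    """Serialize the four affordances, in order, as one line each.

    The layout is an assumed format; a real system would use whatever
    text format its policy model was trained on.
    """
    x1, y1, x2, y2 = coa.object_box
    path = " -> ".join(_fmt(p) for p in coa.waypoints)
    steps = [
        f"Object: {coa.object_name} at box ({x1:.2f}, {y1:.2f}, {x2:.2f}, {y2:.2f})",
        f"Grasp: {_fmt(coa.grasp_point)}",
        f"Place: {_fmt(coa.place_point)}",
        f"Path: {path}",
    ]
    return "\n".join(steps)


if __name__ == "__main__":
    example = ChainOfAffordance(
        object_name="mug",
        object_box=(0.25, 0.35, 0.40, 0.50),
        grasp_point=(0.30, 0.40),
        place_point=(0.50, 0.20),
        waypoints=[(0.30, 0.40), (0.50, 0.20)],
    )
    print(to_reasoning_text(example))
```

In this sketch the serialized text would be supplied to the policy as additional context before it predicts an action; how CoA actually conditions the policy on this reasoning is described in the paper, not here.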

Generalization Experiments of our CoAVLA

BibTeX

@article{li2024coa,
  title={Improving Vision-Language-Action Models via Chain-of-Affordance},
  author={Li, Jinming and Zhu, Yichen and Tang, Zhibin and Wen, Junjie and Zhu, Minjie and Liu, Xiaoyu and Li, Chengmeng and Cheng, Ran and Peng, Yaxin and Feng, Feifei},
  journal={arXiv preprint arXiv:None},
  year={2024}
}

@article{wen2024diffusionvla,
  title={DiffusionVLA: Scaling Robot Foundation Models via Unified Diffusion and Autoregression},
  author={Wen, Junjie and Zhu, Minjie and Zhu, Yichen and Tang, Zhibin and Li, Jinming and Zhou, Zhongyi and Li, Chengmeng and Liu, Xiaoyu and Peng, Yaxin and Shen, Chaomin and Feng, Feifei},
  journal={arXiv preprint arXiv:None},
  year={2024}
}